An Ocean of Models

Will we see a proliferation of AI models?

In the classic Sherlock Holmes short story “The Adventure of the Bruce-Partington Plans” (originally published in 1908), Sherlock explains to Dr. Watson what a great mind his brother Mycroft is.

“We will suppose that a minister needs information as to a point which involves the Navy, India, Canada and the bimetallic question; he could get his separate advices from various departments upon each, but only Mycroft can focus them all, and say offhand how each factor would affect the other. They began by using him as a short-cut, a convenience; now he has made himself an essential.”

Mycroft is able to get inputs from various departments, weigh the pros and cons of each department’s inputs, and then come up with an overall higher-level recommendation. The story does not lay this out, but it is plausible that each department gathered inputs from multiple teams and weighed them to form a department-level recommendation before sending it to Mycroft.

An ocean of models

AI models have been getting better and better every year.

For example, we have all seen data showing newer generative AI models scoring higher and higher on aptitude tests. (It is not clear whether this is the right way to test the strength of a model.) Many generative AI models have now added multimodal capabilities, which include images, video, and some audio.

AI models have evolved by leaps and bounds, especially on the computer vision side (relevant to agriculture), with segmentation and object detection models. These models are key to solving many computer vision challenges in agriculture, such as autonomy, weed detection, and sense and act.

(For reference, segmentation divides an image into regions and assigns a label to each pixel, which provides detailed information about object boundaries and regions. Object detection identifies specific objects in an image or video and classifies them. It focuses on finding the position of each object, typically as a bounding box.)
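To make the difference concrete, here is a minimal sketch in plain Python. The tiny grid stands in for the per-pixel output of a hypothetical segmentation model, and the class ids (soil, crop, weed) are made up for illustration. The `bounding_box` helper derives the kind of coarse box a detection model would report, assuming only one object per class in the image.

```python
# Toy 6x8 "image" as labelled by a hypothetical segmentation model.
# Class ids are invented for this example: 0 = soil, 1 = crop, 2 = weed.
SOIL, CROP, WEED = 0, 1, 2

mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 2, 2, 0],
    [0, 1, 1, 0, 0, 2, 2, 0],
    [0, 0, 0, 0, 0, 0, 2, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def bounding_box(mask, class_id):
    """Return (row_min, col_min, row_max, col_max) for class_id, or None.

    This is the detection-style view: where the object is, not its shape.
    Assumes at most one object of each class in the image.
    """
    cells = [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value == class_id
    ]
    if not cells:
        return None
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    return (min(rows), min(cols), max(rows), max(cols))


def pixel_count(mask, class_id):
    """Segmentation-style view: exactly how many pixels belong to the class."""
    return sum(value == class_id for row in mask for value in row)


weed_box = bounding_box(mask, WEED)
weed_pixels = pixel_count(mask, WEED)
print("weed box:", weed_box, "weed pixels:", weed_pixels)
```

The box tells a sprayer roughly where to aim, while the mask tells it exactly which pixels are weed. Here the weed's box covers six cells but only five of them are actually weed.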

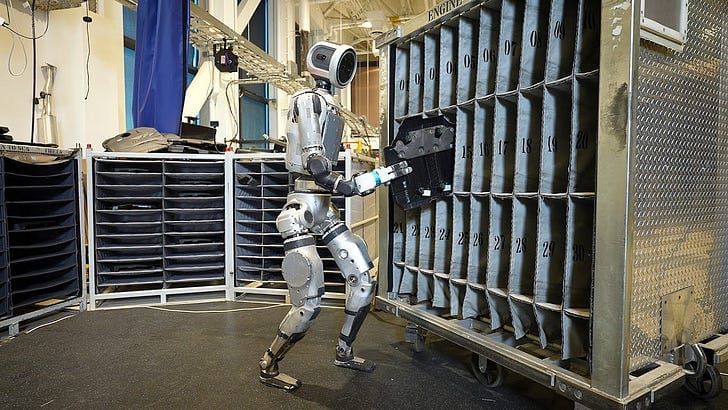

We have seen improvements in what robots can do physically compared to a few years ago. For example, Boston Dynamics’ humanoid robot could do push-ups in April, and now it can do work in a demo space by moving engine parts between bins.

Side note: Boston Dynamics - when can we get these humanoids to clean up our houses??? In the future, parents will complain to their kids about not assigning a room cleaning task to their humanoid robot, instead of complaining about their kid not having made their bed or cleaned their room.

One might think general purpose models from the large tech companies will keep getting better and better, and businesses can simply use these models to solve some of their pressing technology challenges.

Is it possible to do so?

If we draw an analogy with an NFL team (or any sports team), players put in a lot of effort to excel along a handful of dimensions which are important to win an NFL game. When they excel along a handful of dimensions, they do not excel along other dimensions. For humans, it makes sense as there are limitations on time, effort, and physical attributes needed to excel along different dimensions. For example, a center in football will find it challenging to be a wide receiver.

AI models have fewer limitations. If enough data is given to a model along multiple dimensions, it could in theory be good at multiple dimensions. The extreme examples are the large language and multimodal models like Gemini from Google, and ChatGPT from OpenAI.

Why might businesses run multiple models?

Businesses build AI models to solve certain problems. The model is a tool designed to address certain specific pain points. Organizations will use different types of models to solve different types of issues.

When an enterprise deploys any software solution, including AI or generative AI models, the solution has to meet several prerequisites, like compatibility with the company’s IT infrastructure, security certificates, logging, and documentation.

Understanding context and company or industry specific terminology

The vocabulary and context associated with a particular industry are different from those of other industries. General purpose foundation models can pick up the industry context and terminology, but they will have a much harder time understanding the context and terminology used by a specific company. There is a ton of tribal knowledge inside every company. Some of it is available in internal documents, memos, and presentations, while the rest is undocumented and lives in people’s heads.

We have seen examples of AI models relying on industry context supplied through a knowledge graph. The AI models are fine-tuned to understand the industry and company context so that they provide better results.

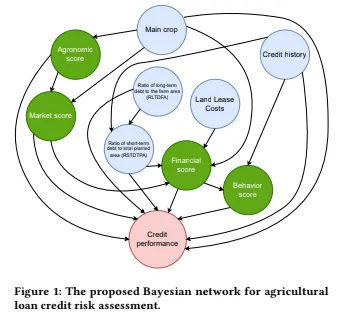

For example, Traive Finance uses a knowledge graph of how different factors affect loan credit risk as a guide and input to an AI model. (See SFTW post “Scaling Innovation: Traive Finance”)

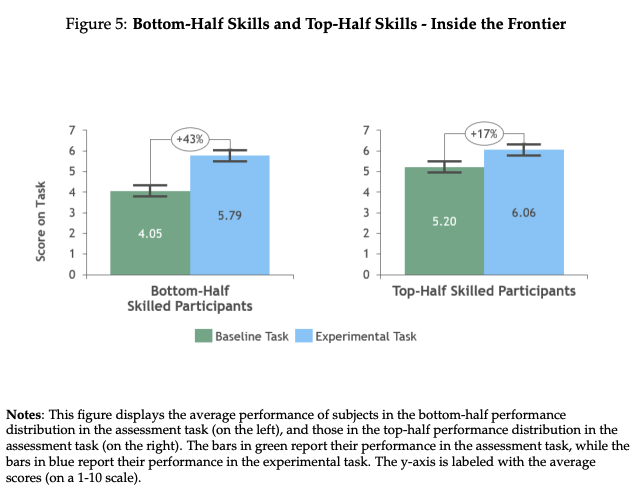

“Figure 1 shows the specific network built by TrAIve. If you look closely, there are 8 arrows going into the final credit performance circle, indicating all of those 8 variables have an impact on the credit performance.

TrAIve is able to pull data from publicly available sources, and rely on experts for domain expertise. They can also understand which experts are the “real experts” and lean into their priors. Experts are particularly good at sub-domains.

It helps TrAIve stitch together a big library of models, which take into account various data elements like weather, satellite, supply chain infrastructure, supply & demand, etc. It helps TrAIve create their own underlying data dictionary and ontology, with their domain expertise.”

Researchers at UIUC recently published a paper that combines domain knowledge with machine learning techniques (knowledge-guided machine learning, or KGML-Ag) to estimate GHG emissions at the farm level with a high degree of accuracy.

“Unlike traditional model-data fusion approaches, we developed KGML-Ag as a new way to bring together the power of sensing data, domain knowledge and artificial intelligence techniques. AI plays a critical role in realizing our ambitious goals to quantify every field’s carbon emission.”

Access to data and security

Businesses will want access to data, and to the intelligence derived from that data, to be managed carefully and clearly. For example, any data related to HR will be off-limits for non-HR staff, so it makes sense to keep models running on HR data separate from models running on non-HR data. Organizations will be extremely careful not to inadvertently leak sensitive information such as personally identifiable information.

Businesses might also want to keep data from different customers separate, so that an AI model working with one customer does not have access to data from another customer.
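One way to picture this separation is to give every model an explicit scope and filter data against it before the model sees anything. The sketch below is illustrative only: `ModelScope`, `Record`, `visible_records`, and the tenant and domain names are all hypothetical, and a real deployment would enforce this in the data platform rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelScope:
    """What a given model is allowed to read (hypothetical structure)."""
    name: str
    domains: frozenset   # e.g. {"hr"} or {"agronomy"}
    tenants: frozenset   # customer ids, or "internal" for company data


@dataclass(frozen=True)
class Record:
    domain: str
    tenant: str
    payload: str


def visible_records(scope, records):
    """Return only the records that fall inside the model's scope."""
    return [
        r for r in records
        if r.domain in scope.domains and r.tenant in scope.tenants
    ]


records = [
    Record("hr", "internal", "salary review notes"),
    Record("agronomy", "customer_a", "soil test, field 12"),
    Record("agronomy", "customer_b", "yield map, field 3"),
]

hr_model = ModelScope("hr-assistant", frozenset({"hr"}), frozenset({"internal"}))
customer_a_model = ModelScope(
    "agronomy-a", frozenset({"agronomy"}), frozenset({"customer_a"})
)

for scope in (hr_model, customer_a_model):
    payloads = [r.payload for r in visible_records(scope, records)]
    print(scope.name, "can see:", payloads)
```

Running one scoped model per domain or per customer is one reason the number of models inside a business grows quickly.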

Reasoning in decision making

Many workflow and business decisions are fairly complicated and depend on inputs and analysis from multiple sources. A product placement decision for seed is one example. An agronomist will typically weigh the pros and cons of different inputs, which can include soil test results, weather information, field history, yield maps, and product availability, before recommending the best course of action.

A set of AI models could work in a similar way, with each AI model specializing in a particular area. These different AI models can then provide their inputs to a reasoning or orchestration model, which can consider the pros and cons of the outputs of each of these models to make a final recommendation. The reasoning model can be optimized to reason across a variety of inputs, which in turn are outputs of other AI models.
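The pattern can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the three specialist functions are simple rules standing in for trained models, the thresholds and confidence values are invented, and "Hybrid A" and "Hybrid B" are placeholder product names. The orchestrator simply adds up weighted confidence per product, which is only one of many ways a reasoning model could combine inputs.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Opinion:
    source: str        # which specialist produced this
    product: str       # recommended seed product
    confidence: float  # 0.0 to 1.0
    reason: str


# Each specialist is a stand-in for a separate AI model.
def soil_model(field):
    product = "Hybrid A" if field["soil_ph"] < 6.5 else "Hybrid B"
    return Opinion("soil", product, 0.7, f"soil pH is {field['soil_ph']}")


def weather_model(field):
    product = "Hybrid B" if field["forecast_rain_mm"] < 300 else "Hybrid A"
    return Opinion("weather", product, 0.6,
                   f"forecast rain is {field['forecast_rain_mm']} mm")


def yield_history_model(field):
    history = field["past_yield"]
    product = max(history, key=history.get)
    return Opinion("yield_history", product, 0.8,
                   f"best past yield was {product}")


def orchestrate(field, specialists, weights):
    """Collect every specialist's opinion and pick the best-supported product."""
    opinions = [model(field) for model in specialists]
    scores = defaultdict(float)
    for op in opinions:
        scores[op.product] += weights.get(op.source, 1.0) * op.confidence
    best = max(scores, key=scores.get)
    return best, dict(scores), opinions


field = {
    "soil_ph": 6.2,
    "forecast_rain_mm": 250,
    "past_yield": {"Hybrid A": 11.2, "Hybrid B": 10.4},
}
specialists = [soil_model, weather_model, yield_history_model]
weights = {"soil": 1.0, "weather": 1.0, "yield_history": 1.0}

best, scores, opinions = orchestrate(field, specialists, weights)
print("recommendation:", best)
for op in opinions:
    print(f"  {op.source}: {op.product} ({op.reason})")
```

Keeping the reasons alongside the scores matters: like Mycroft, the orchestrator is only useful if it can say how each factor affected the final call.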

Execution models

So far, most AI models within agriculture have focused on analysis and decision support.

One AI model will give you a product placement recommendation, another will provide a weather forecast, and so on.

AI models which take action have mostly been run on the edge for operations like weeding, sense and act, vehicle autonomy, and automation. These models are inherently different from models being run in the cloud. The edge models are optimized to perform well within the hardware and operating environment constraints.

In the future, as we get to execution models that take actions such as placing an order or dispatching a team of humans to take care of a specific issue, models will be optimized for accuracy, speed of execution, and managing audit trails, while trying to minimize risk. How each organization tunes these models will depend on its strategy and philosophy around execution risk.

Policy and Audit Models

Different organizations have different policies, and their sensitivity to public relations issues or legal exposure can differ. For example, when I worked at Amazon, it was a policy not to ask a customer a question if you could find the answer without asking the customer.

Customer communication is often reviewed multiple times for brand voice, legal, and PR issues before it goes out. This is especially true for larger organizations, which have a lower risk appetite on issues of a legal or PR nature. One can easily imagine policy and audit models which are independent of the recommendation and analysis model. A policy or audit model is trained on organization-specific policies, principles, and control structures.

The policy or audit model can be run in parallel with the recommendation and analysis model, or after that model has provided its recommendations. The policy or audit model can check for compliance with processes, flag any potential risks, etc. It makes sense to keep such a model separate from the recommendation model to preserve lines of control, accountability, and responsibility, and to identify areas of improvement quickly and efficiently.
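As a rough sketch of that separation, the code below audits a draft customer message without touching the component that wrote it. The checks are simple hand-written rules standing in for a trained policy model, and every name (`Draft`, `AuditResult`, `audit`, the restricted word list) is hypothetical. The first check mirrors the policy described above: do not ask the customer for something you already have on file.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    known_fields: dict  # what the company already has on file for the customer


@dataclass
class AuditResult:
    approved: bool
    flags: list


def asks_for_known_info(draft):
    """Flag questions about data that is already on file."""
    lowered = draft.text.lower()
    return [
        f"asks for '{name}', which is already on file"
        for name in draft.known_fields
        if f"what is your {name}" in lowered
    ]


# Illustrative list only; a real policy would come from legal and PR teams.
RESTRICTED_WORDS = ("guarantee", "risk-free")


def uses_restricted_words(draft):
    """Flag wording that legal or PR would want reviewed."""
    lowered = draft.text.lower()
    return [
        f"contains restricted word '{word}'"
        for word in RESTRICTED_WORDS
        if word in lowered
    ]


POLICY_CHECKS = [asks_for_known_info, uses_restricted_words]


def audit(draft, checks=POLICY_CHECKS):
    """Run every policy check; approve only if nothing is flagged."""
    flags = [flag for check in checks for flag in check(draft)]
    return AuditResult(approved=not flags, flags=flags)


on_file = {"farm size": "450 acres"}
risky = Draft("Hi! What is your farm size? We guarantee a 10% yield increase.",
              on_file)
clean = Draft("Hi! Based on your 450 acre farm, here are two seed options.",
              on_file)

print(audit(risky))
print(audit(clean))
```

Because the audit step only reads the draft, the checks can be updated, logged, or owned by a different team without retraining or redeploying the recommendation model.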

Key Takeaways

So what are the key takeaways for agribusiness and AI based startups in agriculture?

Agribusiness

An AI or a GenAI model at the end of the day is a tool to solve a particular problem.

As an agribusiness, you have access to a lot of proprietary data. Building models is not what will give you a competitive edge.

It is about how to use the data and the knowledge you have about your own company, your own customers, and your own business strategy, and how you can build a set of models to help you execute on it most effectively.

Organizations will have to get better at managing a large number of models (also known as agents).

Today it is difficult for two pieces of software to interact with each other. For example, your accounting software has a tough time talking with your customer service software. This will change in the future. As the interfaces between software become able to understand natural language, it will be much easier for different software to interact with each other.

Organizations will have to do a much better job of managing access control, security, and data governance. They will have to get much better at orchestrating and managing their AI models and agents. They will have to experiment with different models having different responsibilities to better understand the locus of decision recommendations and control. They will have to think creatively about how to manage the interaction paradigms between different models (agents) in a way that represents the strategy, tactics, brand, and ethos of the company.

Employees often use internal tools to do their job efficiently, and oftentimes these internal tools miss the mark in terms of what the employee wants. As the tools to create models become easier to use, we will see more and more employees creating their own private AI or GenAI models, based on the data they have access to, to make their work easier. Agribusinesses will have to manage this process to give employees flexibility while staying true to their principles, strategy, and policies.

Startups building AI models

You can move fast to help build AI models.

But you need access to high quality data, which typically sits with agribusinesses (and this is a new, data-sparse area). Your moat will not come from your ability to build these models. Startups which are building AI based products for agribusiness will have to realize their models could be part of a larger continuous workflow at the agribusiness.

Startups will have to think about the surrounding tools and infrastructure needed to make their AI enabled product a part of their customer’s overall workflow and so create stickiness. Startups with models which can integrate easily with their customer’s workflow will have a better shot at providing a sticky product.

More than 100 years ago, Mycroft Holmes was ahead of his time, as he was a reasoning model in human form! Startups and agribusinesses which enable Mycroft-type AI agents will have a better shot at getting the most value out of AI.